LangOps gives localization new wings to fly in the AI Era

LangOps doesn’t replace localization. Instead, it gives localization new wings to fly in the AI era.

Background

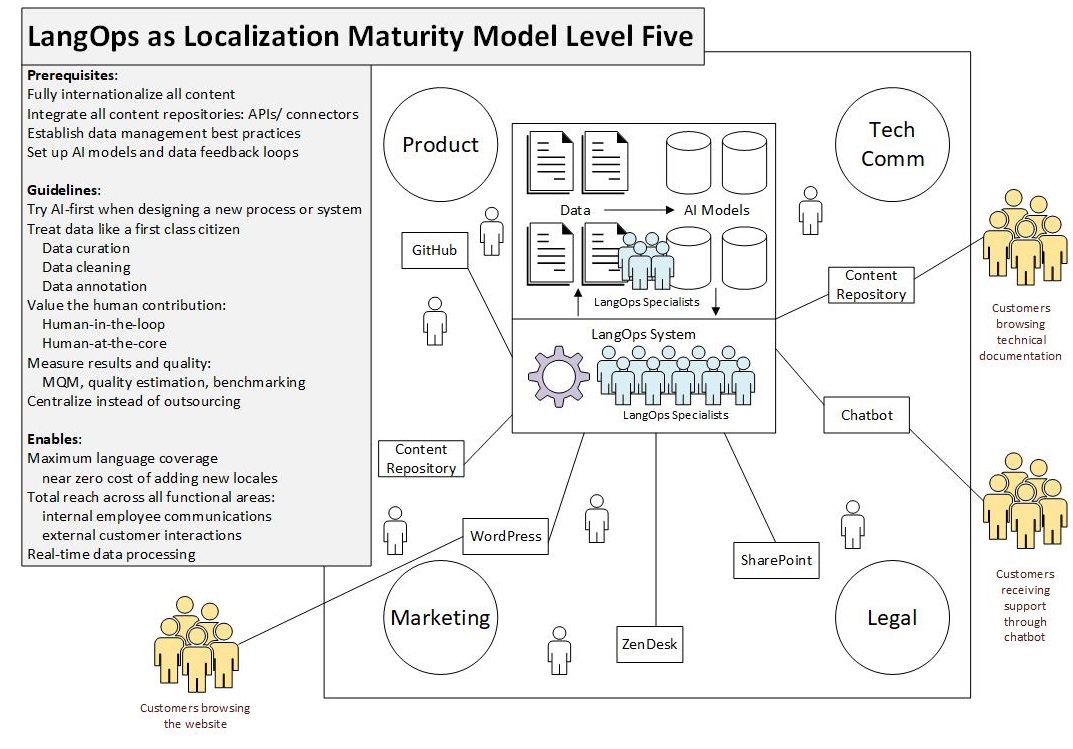

The twelve principles of LangOps give localization teams the roadmap and tools for advancing up Common Sense Advisory’s Localization Maturity Model. By the time an organization reaches localization maturity level five, it is no longer practicing localization, but LangOps. At that point, language has been fully integrated into the DNA of the company.

Before the advent of AI, localization teams often struggled to move beyond level 3 on the localization maturity model, where multilingual content is managed but not measured, optimized, or fully integrated into the enterprise. Reaching level 4 required expensive analytics platforms and the challenging task of onboarding the entire organization. Advancing to level 5 required the effective evangelization of localization at the highest echelons of executive leadership. However, if executives relegated localization to just the product department, the goal of enterprise-wide impact remained out of reach.

LangOps, with AI in one hand and twelve guiding principles in the other, can help enterprise localization teams break through the glass ceiling of level 3 of the Localization Maturity Model (LMM). Who knew that LLMs could be the key to ascending the LMM?

Below are the twelve principles of LangOps from langops.org, annotated with my comments. In them, I aim to answer two questions: 1) How does LangOps fundamentally and definitionally differ from localization? 2) How can adopting a LangOps strategy advance a company up the Localization Maturity Model?

Understand all customers

Our highest priority is to understand every customer no matter what language they use and expand our reach to the widest audience possible.

Localization, in its traditional sense, is often limited by the resources needed for human translation and the complexity of managing multiple languages independently. Conventional localization processes frequently prioritize only the most economically viable languages due to the significant time, cost, and effort involved in manually translating and localizing content. Each additional language introduces a layer of complexity, requiring specialized translators, subject matter experts, and quality assurance processes to ensure accuracy and cultural relevance.

In contrast, LangOps stakes out the largest linguistic territory possible for language services. LangOps is uniquely justified in this claim because its AI-first (principle 4) and language-agnostic (principle 9) approach reduces the marginal cost of adding another language to near zero. When an organization’s products and processes are fully language-agnostic (that is, fully internationalized), expanding beyond the top economically viable languages to long-tail languages becomes far more feasible.

Support all customer facing functions

We initially focus on the most urgent needs, but promote organizational progress and architect solutions which can eventually support all customer interactions.

LangOps stakes out the largest operational territory possible for language services, encompassing all the ways a customer interacts with a brand, a product, or a service. Customers can be internal as well! LangOps serves the internal customers of a global business by powering internal multilingual communications. In contrast, localization has often been confined to the product department and limited to serving external customers only.

LangOps is a rallying cry across the organization to make all internal and external communication multilingual and AI-powered. Enterprise-wide use of centralized, trained LLMs is what gives LangOps its edge over traditional localization in the drive toward centralization. Spence Green comments: “What we see across enterprises right now is a trend toward centralization of tools and vendors. AI will amplify centralization as models are most valuable when they have access to enterprise data. Therefore, enterprise data fragmentation across tools and vendors is strategically not preferred.”

The aim to integrate localization as a core function across the whole enterprise has previously been encoded in level 5 of CSA’s localization maturity model (LMM). Once a distant dream for most enterprises, a level 5 LMM ranking is now within reach with LangOps.

Embrace data-centric AI

Data is the key to performant AI systems for language. We collect, create, structure, maintain, and leverage textual data to deliver efficient solutions.

LangOps treats data as a first-class citizen. That means recognizing that in the AI paradigm, data services bring more value to an enterprise’s operations than ever before. AI developers are constantly in search of data. And localization departments have it in abundance! What’s more, this data can be augmented, cleaned, and synthesized for even better results. These are just a few of the many data services an enterprise might engage in. A localization program that doesn’t include data services misses opportunities to add value to the enterprise’s AI systems.
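To make “cleaned” a little more concrete, here is a minimal Python sketch of a few common translation-memory hygiene filters. The `Segment` type, the `clean_segments` function, and the 3.0 length-ratio cutoff are hypothetical choices for illustration, not part of any particular tool. Dropping pairs whose target equals the source is a heuristic that would also drop legitimately identical strings such as product names, and real pipelines usually add checks like language identification and tag validation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Segment:
    source: str
    target: str


def clean_segments(segments, max_length_ratio=3.0):
    """Return cleaned segments, preserving the original order.

    Drops empty pairs, pairs whose target is identical to the source
    (often untranslated), pairs whose length ratio suggests misalignment,
    and exact duplicates. Leading/trailing whitespace is stripped.
    """
    seen = set()
    cleaned = []
    for seg in segments:
        src, tgt = seg.source.strip(), seg.target.strip()
        if not src or not tgt:
            continue  # empty source or target
        if src == tgt:
            continue  # probably left untranslated
        longer, shorter = max(len(src), len(tgt)), min(len(src), len(tgt))
        if longer / shorter > max_length_ratio:
            continue  # lengths too different: likely a misaligned pair
        if (src, tgt) in seen:
            continue  # exact duplicate of an earlier pair
        seen.add((src, tgt))
        cleaned.append(Segment(src, tgt))
    return cleaned


raw = [
    Segment("Save changes", "Guardar cambios"),
    Segment("Save changes ", "Guardar cambios"),  # duplicate after stripping
    Segment("Cancel", ""),                          # empty target
    Segment("OK", "OK"),                            # identical to source
    Segment("Open", "Abrir el archivo seleccionado en una ventana nueva"),
]
print(clean_segments(raw))  # only the first "Save changes" pair survives
```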

The traditional definition of localization often hampers the effective use of data across the entire enterprise by treating linguistic tasks as isolated from broader data-centric operations. Localization departments typically focus narrowly on translating and adapting content for specific markets, often without integrating their rich repositories of linguistic data into the organization’s overarching data strategy. Consequently, this siloed approach prevents the enterprise from fully leveraging valuable textual data for AI training, optimization, and continuous feedback loops. By not capitalizing on these data services, organizations miss out on opportunities to enhance AI performance, streamline operations, and drive smarter, data-informed decisions across various functions, from marketing to customer support.

Try AI first

AI systems are already good enough for many use cases and their performance will continue to improve. We always start by trying AI solutions to solve language problems.

Localization as a concept doesn’t fully embrace the current and future potential of AI. Traditional localization relies heavily on human effort to translate and adapt content, making it slow, expensive, and hard to scale. Without an AI-first strategy, it forgoes the speed and scale that AI offers and struggles to keep pace.

As the linguistic quality of AI output climbs, it’s becoming harder to justify excluding AI, and the set of content types where exclusion still makes sense keeps narrowing. AI skeptics should also keep in mind that direct content translation is not the only useful application of AI. I’ve even used AI to help brainstorm rhyming phrases when translating poetry. In other words, no matter what the situation or content type, there’s a role for AI to play: adapting style, tone, or terminology; conducting QA checks; synthesizing multiple linguistic inputs from TMs, terminology, and MT; or serving as a brainstorming tool.

In terms of content translation, AI-translated content is often already fit for purpose. And if it’s not, it’s a good starting point for human revision. That means process planners should try AI first. If it’s not suitable, at least they will know exactly what the human involvement should entail and how to improve the AI output moving forward.
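A try-AI-first plan can be expressed as a simple routing rule. The sketch below assumes some QE model has already produced a 0 to 100 score for each machine-translated segment; the `route_segment` name and the 85/60 thresholds are hypothetical and would need calibrating against your own content and quality bar.

```python
def route_segment(qe_score, publish_at=85.0, post_edit_at=60.0):
    """Map a 0-100 QE score to the level of human involvement needed."""
    if not 0.0 <= qe_score <= 100.0:
        raise ValueError("qe_score must be between 0 and 100")
    if qe_score >= publish_at:
        return "publish"          # AI output is fit for purpose as-is
    if qe_score >= post_edit_at:
        return "post-edit"        # good starting point for human revision
    return "human-translate"      # not usable; a linguist starts over


for score in (92.0, 71.5, 38.0):
    print(score, route_segment(score))
```

Recording which route each segment takes, and what the linguist changed, is the kind of feedback that shows where the AI output needs to improve.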

Assess quality of AI

Quality is the pillar for trust in AI. Everything we create is proactively assessed through the lens of quality, so we can act before errors arise.

Long gone are the days when localization quality and impact could not be measured. We live in a data-driven world, where browsers track each subtle mouse movement and click, aggregating the data into valuable insights for advertisers and content designers. Likewise, the localization industry has never before had such a wealth of tools available to assess quality. The creation of the MQM framework was a great leap forward for our industry, allowing content designers to define what quality means for themselves, then measure it and benchmark it. Dashboards of linguistic quality are now a must-have feature in any enterprise localization system.

The rapid evolution of quality estimation (QE), accelerated by the advent of LLMs, has supercharged efforts to measure quality. Reference-free QE metrics from the COMET family, such as CometKiwi, can reliably estimate quality without a reference translation, and they have changed the game. LLM-driven metrics like GEMBA-MQM have been reported to achieve state-of-the-art accuracy at ranking MT systems, again without a reference translation. In addition to producing a quantitative quality score on a scale from 0 to 100, these models can also identify specific linguistic errors, categorize them using the MQM model, and estimate their severity. Previously, this kind of qualitative assessment was only possible thanks to the tireless efforts of human LQA reviewers.
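To show how error annotations become a number, here is a simplified, MQM-style scoring sketch. It is not GEMBA-MQM or the official MQM scoring model: the severity weights (1, 5, 25), the normalization by word count, and the category labels are assumptions for illustration. The same roll-up works whether the annotations come from an LLM or a human LQA reviewer.

```python
from collections import Counter

# Illustrative severity weights; MQM lets each program define its own.
SEVERITY_WEIGHTS = {"minor": 1, "major": 5, "critical": 25}


def mqm_style_score(errors, word_count, weights=SEVERITY_WEIGHTS):
    """Convert (category, severity) annotations into a 0-100 quality score.

    The weighted penalty is normalized by the number of evaluated words,
    and the result is clamped so it never drops below 0.
    """
    if word_count <= 0:
        raise ValueError("word_count must be positive")
    penalty = sum(weights[severity] for _category, severity in errors)
    return max(0.0, 100.0 * (1.0 - penalty / word_count))


def errors_by_category(errors):
    """Count errors per category, e.g. for a quality dashboard."""
    return Counter(category for category, _severity in errors)


annotations = [
    ("accuracy/mistranslation", "major"),
    ("fluency/grammar", "minor"),
    ("terminology", "minor"),
]
print(round(mqm_style_score(annotations, word_count=100), 1))  # 93.0
print(errors_by_category(annotations))
```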

The aim to quantify, track, and improve quality has long been a tenet of level 4 of CSA’s localization maturity model. LangOps, with its data-driven approach to both AI inputs and outputs, puts enterprises on a fast-track to achieving this challenging level of localization maturity.

Value human contribution

We value the human contribution that constantly improves our data and thus machines.

An AI-first approach recognizes that AI models are in constant need of training and improvement. That improvement comes through new training data, which comes from the human contribution. Any time a human performs a process, data is generated. For example, post-editing creates a triplet consisting of the source text, the machine translation, and the post-edited machine translation. These triplets can then be used to retrain the system to produce better outputs. The human contribution is also essential in other forms of data services: data curation, data annotation, and data cleaning.
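The triplet described above maps naturally onto a small record type. This Python sketch, with hypothetical names (`PostEditTriplet`, `to_training_jsonl`), serializes triplets as JSON Lines, a common interchange format for training data. Keeping only segments the linguist actually changed is one possible design choice, not a requirement; unchanged segments can also serve as confirmation that the MT was acceptable.

```python
import json
from dataclasses import asdict, dataclass


@dataclass
class PostEditTriplet:
    source: str
    machine_translation: str
    post_edit: str

    @property
    def was_edited(self) -> bool:
        """True if the linguist changed the machine output."""
        return self.machine_translation.strip() != self.post_edit.strip()


def to_training_jsonl(triplets, edited_only=True):
    """Serialize triplets as JSON Lines, one JSON object per line."""
    records = (asdict(t) for t in triplets if t.was_edited or not edited_only)
    return "\n".join(json.dumps(r, ensure_ascii=False) for r in records)


triplets = [
    PostEditTriplet("Add to cart", "Añadir al carro", "Añadir al carrito"),
    PostEditTriplet("Checkout", "Pagar", "Pagar"),  # accepted unchanged
]
print(to_training_jsonl(triplets))
```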

Although AI will find a role to play in every process, human oversight over those processes is still essential. A language manager in each operational language will still prove indispensable in ensuring acceptable quality from AI-driven systems and recommending updates to improve quality.

Common Sense Advisory reframes the buzzphrase “human-in-the-loop” (HITL) as “human-at-the-core,” recognizing that humans are actually moving deeper into language processes: “The fact that human contributions are increasing in value argues against a simple replacement theory.”

Expect transparency, control and scalability

We create scalable, transparent, easy-to-use systems. We can control and change the systems to improve the process.

The early neural machine translation (NMT) systems introduced around 2015 presented a challenge in terms of transparency and control. Unlike their statistical predecessors, whose phrase tables and probability models could be inspected, NMT models often operated as black boxes, making it difficult to understand how they arrived at a given translation. Of course, workarounds such as glossary enforcement and domain-specific training have emerged since then.

The emergence of LLMs marked a significant advancement in natural language processing. LLM output is inherently variable, because each response is sampled from a probability distribution over possible next tokens. However, users can influence that output through techniques such as prompt engineering, fine-tuning, and retrieval-augmented generation (RAG), which has substantially improved control and predictability. These methods can mitigate, to some degree, the inherent randomness of LLM responses.
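As an illustration of retrieval-augmented prompting for translation, the sketch below retrieves fuzzy translation-memory matches and applicable glossary terms, then folds them into a prompt. Python’s standard `difflib` similarity stands in for a production retriever, the prompt wording and function names are hypothetical, and no LLM is called; the point is only to show how retrieved TM and terminology can constrain the model’s output.

```python
import difflib


def retrieve_tm_matches(source, translation_memory, k=2, cutoff=0.6):
    """Return up to k (tm_source, tm_target, similarity) tuples, best first."""
    scored = []
    for tm_source, tm_target in translation_memory:
        similarity = difflib.SequenceMatcher(None, source, tm_source).ratio()
        if similarity >= cutoff:
            scored.append((tm_source, tm_target, similarity))
    scored.sort(key=lambda match: match[2], reverse=True)
    return scored[:k]


def build_translation_prompt(source, target_language, translation_memory, glossary):
    """Assemble a prompt grounded in retrieved TM matches and glossary terms."""
    matches = retrieve_tm_matches(source, translation_memory)
    # Naive substring check; a real system would match on tokens or lemmas.
    terms = {s: t for s, t in glossary.items() if s.lower() in source.lower()}
    lines = [f"Translate the following text into {target_language}."]
    if terms:
        lines.append("Use these approved terms:")
        lines += [f"- {s} -> {t}" for s, t in terms.items()]
    if matches:
        lines.append("Similar past translations, for reference:")
        lines += [f"- {s} => {t}" for s, t, _ in matches]
    lines.append(f"Text: {source}")
    return "\n".join(lines)


memory = [
    ("Save your changes before closing.", "Guarde los cambios antes de cerrar."),
    ("Delete the file", "Eliminar el archivo"),
]
glossary = {"changes": "cambios", "workspace": "espacio de trabajo"}
print(build_translation_prompt("Save your changes before exiting.", "Spanish",
                               memory, glossary))
```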

Too often, traditional translation management systems assume that users’ workflows are strictly linear: translation, revision, review. Modern enterprises understand that’s far from true. That’s why, as a long-time WorldServer user, I’ve appreciated the sandbox approach to workflows it offers. Arrange any sequence of automatic and human actions in any order, and the system will execute it flawlessly. Logical triggers can be built in, and the system can integrate with third-party repositories and cloud storage. Automatic actions can execute API calls to third-party services like LLMs. It’s the most flexible workflow designer I’ve seen in a traditional TMS.

But WorldServer is not for everyone, and neither is its price tag. The emergence of workflow orchestrators like Blackbird.io signals that customers are tired of being boxed in by workflow limitations in commercial TMSs. Conversations about the hypothetical features of a “Post-TMS” are attracting eyeballs: the Post-TMS is “headless,” “API-first,” and “non-linear,” affording users maximum transparency, control, and scalability.
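The idea of arranging automatic and human steps in any order, with logical triggers, can be sketched in a few lines. This is not WorldServer’s or Blackbird.io’s API; every step below is a hypothetical stub, and the QE value is hardcoded where a real system would call a QE service. Conditions let a step run or be skipped, which is a simple stand-in for the branching a full workflow designer offers.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Step:
    name: str
    action: Callable[[dict], dict]
    # Optional trigger: the step runs only when this returns True.
    condition: Optional[Callable[[dict], bool]] = None


def run_workflow(steps, job):
    """Run steps in their configured order, skipping untriggered ones."""
    history = []
    for step in steps:
        if step.condition is None or step.condition(job):
            job = step.action(job)
            history.append(step.name)
    return {**job, "history": history}


# Hypothetical stub actions; real ones would call MT, QE, or a review queue.
def machine_translate(job):
    return {**job, "target": f"<MT of {job['source']}>"}


def estimate_quality(job):
    return {**job, "qe": 72.0}  # hardcoded stand-in for a QE service


def human_review(job):
    return {**job, "reviewed": True}


def publish(job):
    return {**job, "published": True}


workflow = [
    Step("mt", machine_translate),
    Step("qe", estimate_quality),
    Step("review", human_review, condition=lambda job: job["qe"] < 85),
    Step("publish", publish),
]
print(run_workflow(workflow, {"source": "Free shipping"})["history"])
# ['mt', 'qe', 'review', 'publish']
```

Reordering the list, or adding a step such as an LLM style pass with its own trigger, changes the workflow without touching the runner.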

Level 4 of CSA’s localization maturity model enshrines these values, but it’s LangOps, with highly customizable LLMs and post-TMS tooling, that most effectively realizes these goals.

Process data in real time

The world is moving to dynamic content creation. We build with real time at the core.

Before AI achieved widespread adoption, one effective way to get as close as possible to real-time language services was the “follow-the-sun” model: employing professionals around the globe who could handle any request in any language at any time. The follow-the-sun model assumed that language-specific human specialists were doing all the work, and that the work had to be scheduled around their working hours in their time zones.

In the AI era, that assumption no longer holds. To a large degree, real-time delivery can now happen outside the typical 9-to-5 of language specialists in specific locales. Just consider the proliferation of multilingual chatbots that serve as a first filter for support tickets, recommending knowledge-base answers to questions that have already been solved. Consider all the eBay product titles, descriptions, and specifications that are translated in real time. Consider all the TripAdvisor reviews that are translated in real time into a myriad of locales.

Of course, real-time processing of user-generated content had been going on for 10 years before the advent of ChatGPT, using statistical and neural MT. But does the term “localization” encompass these activities? I believe LangOps, with its focus on real-time data processing, embodies them more effectively, and that eBay and TripAdvisor were practicing LangOps even before they knew what to call it.

Build language-agnostic

We design all processes and supporting systems with ease of scaling to new languages. We dynamically integrate language into our products without any hardwiring.

In traditional language services, the ability to scale to new languages comes as a result of effective internationalization. If a specific language is hardwired or hardcoded into a product, the cost of scaling to new languages rises, and localization professionals must find ways to adapt the locale-specific elements that have been built into the product. In contrast, a fully internationalized product is ready to scale to additional languages with no additional engineering effort.
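The difference between hardcoding and internationalization is easiest to see in code. In this minimal Python sketch, the catalog contents, locale codes, and the `translate` helper are illustrative assumptions; production systems would typically use an established format such as gettext catalogs or ICU MessageFormat, which also handle plurals and grammatical gender.

```python
# Hardcoded: supporting another language means editing and redeploying code.
def greeting_hardcoded(name):
    return f"Welcome back, {name}!"


# Internationalized: user-facing strings live in per-locale catalogs.
CATALOGS = {
    "en": {"welcome_back": "Welcome back, {name}!"},
    "es": {"welcome_back": "¡Bienvenido de nuevo, {name}!"},
    "de": {"welcome_back": "Willkommen zurück, {name}!"},
}
DEFAULT_LOCALE = "en"


def translate(message_id, locale, **params):
    """Look up a message, falling back from 'es-MX' to 'es' to the default."""
    for candidate in (locale, locale.split("-")[0], DEFAULT_LOCALE):
        template = CATALOGS.get(candidate, {}).get(message_id)
        if template is not None:
            return template.format(**params)
    raise KeyError(f"unknown message id: {message_id}")


print(translate("welcome_back", "es-MX", name="Ana"))
print(translate("welcome_back", "fr-FR", name="Ana"))  # falls back to English

# Adding a language is a data change, not a code change.
CATALOGS["it"] = {"welcome_back": "Bentornato, {name}!"}
print(translate("welcome_back", "it", name="Ana"))
```

That design choice is the heart of the principle: once strings are keyed by ID and resolved at runtime, scaling to a new locale becomes mostly a content and translation task.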

Although localization benefits from internationalization, localization as a concept and activity stands distinct from it. LangOps, on the other hand, makes internationalization a core activity through this principle: build language-agnostic.

Promote interdisciplinary knowledge

We train LangOps in the different areas required to be efficient. We continuously enable teams to evolve and learn new skills.

LangOps professionals need to have a multidisciplinary skill set, encompassing everything from linguistic proficiency and cultural sensitivity to expertise in AI technologies and data science. They must understand machine learning frameworks, data annotation processes, and quality evaluation metrics, all while maintaining a deep understanding of the markets and customer behaviors for each language segment. This holistic expertise is crucial as LangOps integrates itself across diverse operational areas of an enterprise, from customer support and marketing to product development and HR communications. Such interdisciplinary expertise ensures LangOps can provide the tailored and scalable solutions needed to engage users effectively across varying geographies and languages.

This interdisciplinary approach differs from traditional localization, which primarily focuses on adapting content for a specific locale rather than integrating into a variety of business functions. While localization often works in silos, addressing language adaptation as an isolated task, LangOps embraces a cross-functional methodology, aiming to embed linguistic capabilities into the fabric of the entire enterprise.

Leverage available data and tech

We make use of available tools and data collections. We strictly account for total cost of ownership in buy or build situations.

LangOps thrives on standing on the shoulders of giants by leveraging existing open-source technologies and public datasets. Using established tools like pre-trained language models, open-source workflow orchestrators, and existing linguistic data repositories, LangOps can achieve advanced functionality without incurring prohibitive costs. The principle of total cost of ownership ensures that any decision to build custom solutions is weighed against the potential benefits and longevity, prioritizing smart investments over vanity projects.

This approach can be distinguished from traditional localization, which might heavily invest in bespoke translation tools or proprietary databases without as broad an integration perspective. By leveraging communal advancements and shared resources, LangOps allows for more efficient scaling and innovation.

Be at the forefront

We invest time to research and study academic and commercial advances. We future proof LangOps by constantly pushing the boundaries.

LangOps maintains a forward-looking focus by continuously exploring cutting-edge technologies and methodologies in both academic and commercial spheres. This commitment to research ensures that LangOps is not merely reactive but proactively evolves in anticipation of industry shifts and technological advancements. Whether through partnerships with academic institutions for groundbreaking research or pilot projects with new commercial tech providers, LangOps aims to stay ahead of the curve, ensuring that the enterprise is not only up-to-date but also a leader in the linguistic and AI space.

This progressive mentality sets LangOps apart from traditional localization, which has often been slower to adopt new technologies and practices, primarily due to its compartmentalized role within organizations. LangOps breaks this mold by prioritizing innovation and continuous improvement, ensuring it remains at the cutting edge of both AI and language services.